mimi WebSocket API Service

1. Client Requirements

1.1. Hardware / Device

The microphone SHOULD exhibit an approximately flat amplitude-versus-frequency response, specifically within ±3 dB from 100 Hz to 4000 Hz.

Total harmonic distortion SHOULD be less than 1% at 1 kHz with a 90 dB SPL input level.

Noise reduction processing for a monaural microphone, if present, MUST be disabled. If the device has multiple microphones, contact us.

Automatic gain control, if present, MUST be disabled.

1.2. Software

The client MUST support the WebSocket protocol (RFC 6455).

Note:

- The mimi server does not support the full WebSocket specification. See the note in Section 3.1.3.

2. Authentication

2.1 mimi OAuth Overview

The mimi service provides an OAuth authentication service for customers. The mimi authentication server authorizes the customer's authentication server in advance using shared secret credentials.

Clients periodically ask the customer's authentication server to renew their temporary token. The customer's authentication server passes the request on to the mimi authentication server.

The mimi authentication server issues a temporary token (also known as an access token) to the customer's authentication server. Clients are authorized to use the mimi service by this temporary token.

For detailed information about the mimi OAuth service, please contact us.

3. Overview of request to mimi

3.1 Overview of mimi Client Request

The mimi server receives an incoming audio stream from a WebSocket client in binary frames and returns a recognition result to the client: a transcription of what was spoken for mimi ASR, a speaker ID or speaker type for SRS, an audio category for AIR, and so on.

The server also receives commands from the client in text frames, which the client uses to initialize and/or control the service.

This chapter gives an overview of the whole communication procedure in four parts: establishing the WebSocket connection, sending special commands in text frames, sending audio in binary frames, and getting results over WebSocket.

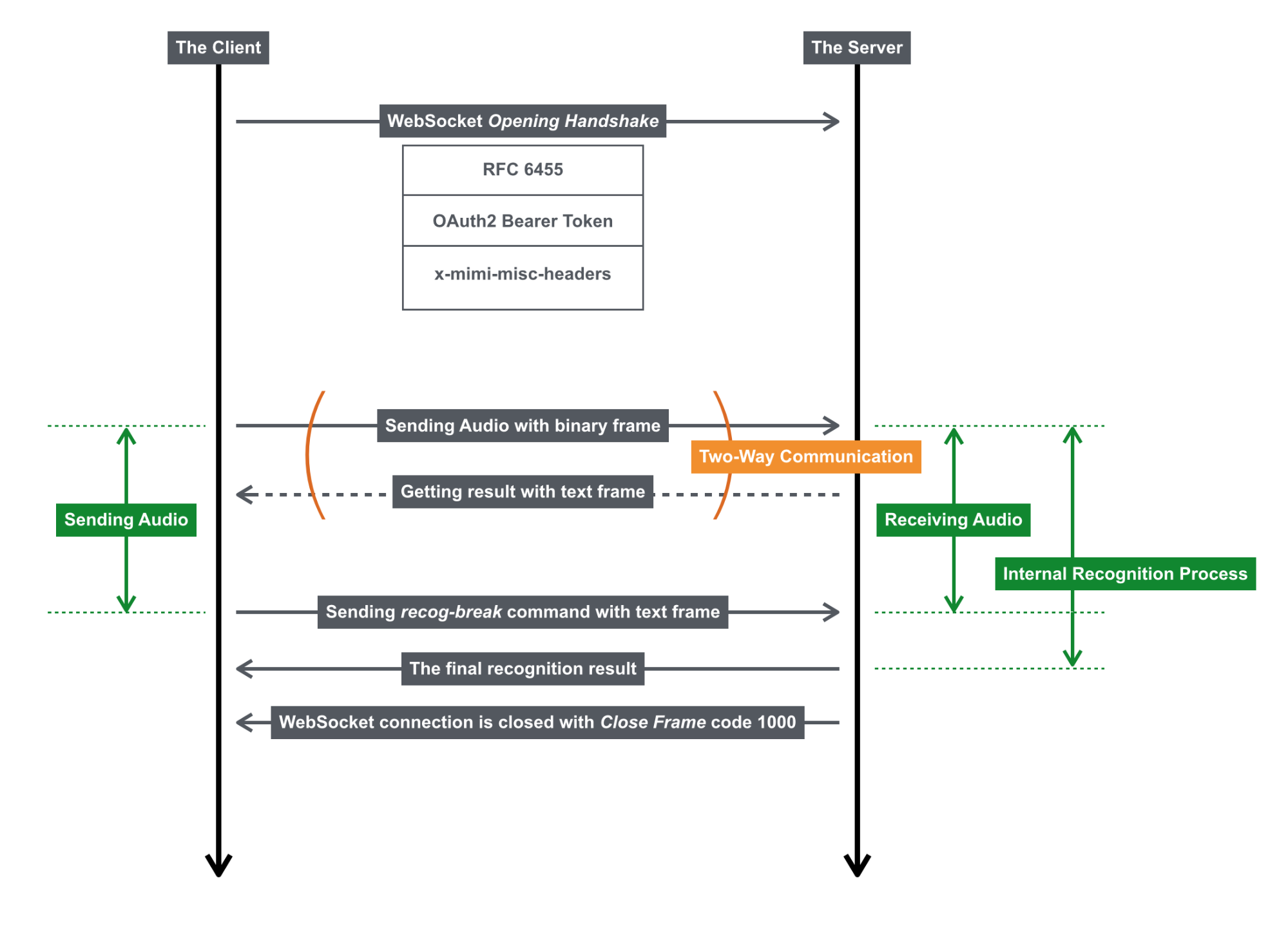

Fig. 3.1.A WebSocket communication overview

The left line shows the client-side timeline and the right line shows the server-side timeline. The green arrows show the ranges for Sending Audio, Receiving Audio, and the Internal Recognition Process. The orange brackets indicate that sending and receiving on the WebSocket occur multiple times.

3.1.1 Establish WebSocket Connection

When the client establishes a WebSocket connection to the mimi server with the Opening Handshake, it may/must send an OAuth2 bearer token for authentication, and it can also send custom HTTP request headers as described below. Once the connection is established, the client can immediately send audio in binary frames.

Authentication

The client may/must send a bearer token (also known as an access token, acquired from the access token publisher) in the opening handshake as follows:

Authorization: Bearer XXXXXXX

Audio Codec

The client can specify the audio codec with a header as follows:

X-Mimi-Content-Type: audio/x-flac;bit=16;rate=16000;channels=1

The mimi remote host accepts audio/x-flac and audio/x-pcm with bit=16, rate=16000, channels=1. It can also accept other sampling rates and multi-channel streams of up to 8 channels, depending on your agreement and the terms of mimi usage.

If you do not specify anything, the default codec is audio/x-pcm;bit=16;rate=16000;channels=1.

Note:

PCM does NOT mean the .wav format (Waveform Audio File Format). A .wav file is a RIFF file that starts with a file header followed by a sequence of PCM audio data chunks.

PCM here means a so-called raw audio file, which often has a .raw extension and is identical to a .wav file without its file header. In other words, a raw audio file contains PCM audio data chunks only.
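As an illustration of the difference described in this note, the following is a minimal sketch that uses Python's standard `wave` module to drop the RIFF header from a .wav file and keep only the PCM data. The function names `wav_to_raw_pcm` and `make_wav` are hypothetical helpers for this example, and the parameter check assumes the default codec (16-bit, 16000 Hz, mono).

```python
import io
import wave


def wav_to_raw_pcm(wav_bytes: bytes) -> bytes:
    """Return only the PCM sample data of a WAV file, without its header."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as wav:
        # Assumption: the stream should match the default codec parameters.
        if (wav.getsampwidth(), wav.getframerate(), wav.getnchannels()) != (2, 16000, 1):
            raise ValueError("expected 16-bit, 16000 Hz, mono audio")
        return wav.readframes(wav.getnframes())


def make_wav(pcm: bytes) -> bytes:
    """Demo helper: wrap raw 16-bit, 16000 Hz, mono PCM in a WAV container."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(16000)
        wav.writeframes(pcm)
    return buf.getvalue()


if __name__ == "__main__":
    pcm = bytes.fromhex("F8FFFFFF0000FDFF")
    wav_file = make_wav(pcm)
    print(wav_file[:4])                     # the container starts with b"RIFF"
    print(wav_to_raw_pcm(wav_file) == pcm)  # only the samples remain
```

Only the bytes returned by `wav_to_raw_pcm` would be suitable as audio/x-pcm payload; sending the .wav file as-is would include the header bytes in the audio stream.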

Custom Log

The client can send a custom log string as follows. The log string is recorded in server-side log storage together with the session_id. The maximum length of the log string is 64 KiB.

X-Mimi-User-Log-Context: XXXXXXX
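The three headers above can be assembled as in the following minimal sketch. The function name `build_handshake_headers` and its parameters are hypothetical, and measuring the 64 KiB log limit in UTF-8 bytes is an assumption; the header names and value formats are taken from this section. How the headers are attached to the Opening Handshake depends on the WebSocket library in use.

```python
from typing import Dict, Optional

MAX_LOG_CONTEXT_BYTES = 64 * 1024  # 64 KiB limit on the custom log string


def build_handshake_headers(
    access_token: Optional[str] = None,
    codec: str = "audio/x-pcm",
    bit: int = 16,
    rate: int = 16000,
    channels: int = 1,
    log_context: Optional[str] = None,
) -> Dict[str, str]:
    """Build the custom HTTP request headers for the Opening Handshake."""
    headers = {
        "X-Mimi-Content-Type": f"{codec};bit={bit};rate={rate};channels={channels}",
    }
    if access_token is not None:
        headers["Authorization"] = f"Bearer {access_token}"
    if log_context is not None:
        # Assumption: the limit is counted in bytes of the UTF-8 encoding.
        if len(log_context.encode("utf-8")) > MAX_LOG_CONTEXT_BYTES:
            raise ValueError("log context exceeds 64 KiB")
        headers["X-Mimi-User-Log-Context"] = log_context
    return headers


if __name__ == "__main__":
    for name, value in build_handshake_headers("XXXXXXX", codec="audio/x-flac").items():
        print(f"{name}: {value}")
```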

Other optional headers for mimi AFN (advanced front end)

Other optional headers are defined for each function of mimi AFN (advanced front end). See the section on mimi AFN for details.

3.1.2 Control Commands on WebSocket

Commands are sent from the client to the server in text frames and are used to initialize and/or control the service. The available commands are listed in Table 3.1.A.

Table 3.1.A List of Commands on WebSocket communication

| Command | Description |
|---|---|
| recog-init | Initialization. Configures the speech recognition conditions. Not usable so far. |
| recog-break | Notifies the server of a speech breakpoint. |

Detailed Description of Commands

recog-init

The recog-init command can be used by the client to notify the server that it is about to start sending the audio stream. It is also used to configure the speech recognition service. For the ASR service, the recog-init command is not usable so far.

recog-break

The recog-break command is used by the client to notify the server of the end of the audio stream. It allows the server to finalize the recognition result for the audio received so far.

While sending the audio stream frame by frame, the client progressively receives temporary recognition results. These results are subject to change as subsequent audio arrives, which improves their reliability.

After receiving the recog-break request from the client, the server returns the final (fixed) recognition result for the previously received audio stream, terminates the recognition process internally, and closes the WebSocket connection by sending a Close frame with status code 1000.

The recog-break request can only be used after at least one audio frame has been sent. There is no response corresponding to the recog-break request itself.

Here is an example of the recog-break request.

    // client -> server
    {
      "command": "recog-break"
    }
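The ordering rule for recog-break (at least one audio frame first) can be enforced on the client side as in the following minimal sketch. The class `RecogSession` and the `send_binary` / `send_text` callables are hypothetical; they stand in for whatever WebSocket library the client uses. Refusing further audio after recog-break is a design choice of this sketch, based on the server closing the connection after the command.

```python
import json
from typing import Callable


class RecogSession:
    """Tracks what has been sent so recog-break is only issued when allowed."""

    def __init__(self, send_binary: Callable[[bytes], None],
                 send_text: Callable[[str], None]) -> None:
        self._send_binary = send_binary
        self._send_text = send_text
        self._audio_frames_sent = 0
        self._break_sent = False

    def send_audio(self, frame: bytes) -> None:
        # After recog-break the server closes the connection, so stop here.
        if self._break_sent:
            raise RuntimeError("recog-break was already sent")
        self._send_binary(frame)
        self._audio_frames_sent += 1

    def send_break(self) -> None:
        # recog-break is only valid after at least one audio frame.
        if self._audio_frames_sent == 0:
            raise RuntimeError("send at least one audio frame before recog-break")
        self._send_text(json.dumps({"command": "recog-break"}))
        self._break_sent = True


if __name__ == "__main__":
    session = RecogSession(lambda b: print("binary frame:", len(b), "bytes"),
                           lambda t: print("text frame:", t))
    session.send_audio(b"\x00\x00" * 160)
    session.send_break()
```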

Note:

To detect the end of the audio stream on the client side, you have to use a VAD (Voice Activity Detector) or GSED (General Sound Event Detector) in the client. We also provide a server-side VAD and GSED to make this API easier to use. See the section on mimi AFN for details.

Note:

The server will close the WebSocket connection after receiving the recog-break request. The client should respond with a Close frame carrying the same status code, in accordance with RFC 6455.

3.1.3 Sending Audio on WebSocket

The client can send the audio stream to the server progressively in binary frames after the connection is opened.

The audio format must match the X-Mimi-Content-Type header sent in the Opening Handshake.

The maximum payload length of each binary frame is 64 KiB, regardless of the sampling rate or the number of channels.

Here is an example of sending audio stream from the client to the server.

// client -> server

F8 FF FF FF 00 00 FD FF F7 FF F1 FF F3 FF F3 FF EE FF DC FF CA FF B5 FF A8 FF A4 FF B6 FF D4

Note:

Currently, mimi server does not support receiving fragmented messages (Section 5.4 of RFC 6455). The client must not send fragmented messages.
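The payload limit above can be respected by splitting the audio before sending, as in the following minimal sketch. The function `iter_audio_frames` and the default `chunk_size` are hypothetical; aligning chunks to whole 16-bit samples is a precaution assumed here, not a documented requirement. Each yielded chunk is meant to be sent as one complete, unfragmented binary message.

```python
from typing import Iterator

MAX_FRAME_PAYLOAD = 64 * 1024  # 64 KiB per binary frame
BYTES_PER_SAMPLE = 2           # bit=16 in the default codec


def iter_audio_frames(pcm: bytes, chunk_size: int = 4096) -> Iterator[bytes]:
    """Split raw PCM into payloads that each fit into one binary frame."""
    if not 0 < chunk_size <= MAX_FRAME_PAYLOAD:
        raise ValueError("chunk_size must be between 1 and 65536 bytes")
    if chunk_size % BYTES_PER_SAMPLE:
        # Assumption: keep every chunk aligned to whole 16-bit samples.
        raise ValueError("chunk_size must be a multiple of 2 bytes")
    for offset in range(0, len(pcm), chunk_size):
        yield pcm[offset:offset + chunk_size]


if __name__ == "__main__":
    one_second = bytes(16000 * BYTES_PER_SAMPLE)  # 1 s of silence, mono
    sizes = [len(frame) for frame in iter_audio_frames(one_second)]
    print(len(sizes), "frames, last frame", sizes[-1], "bytes")
```

With the default codec (16 bit, 16000 Hz, 1 channel) one second of audio is 32000 bytes, so a single 64 KiB frame carries at most 65536 / 32000 = 2.048 seconds of audio.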

3.1.4 Getting Response on WebSocket

A response message is sent to the client in text frames. It contains status and attribute information about the completion and/or progress of the request. The possible status values are listed in the table below.

When a non-recoverable error occurs while processing a request from the client, the server closes the WebSocket connection by sending a Close frame with an error code.

| Status | Description |
|---|---|
| recog-init | Completion of initialization. Not usable so far. |
| recog-in-progress | Recognition is still ongoing. The result is subject to change. |
| recog-finished | Recognition is finished. The result is fixed. |
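Because each recog-in-progress result may be superseded by a later one, a client typically keeps only the most recent message until recog-finished arrives. The following is a minimal sketch of that bookkeeping; the class `ResultTracker` is hypothetical, and silently ignoring other status values (such as recog-init) is an assumption of this example.

```python
import json
from typing import Optional


class ResultTracker:
    """Keeps the latest recognition message and notes when it is final."""

    def __init__(self) -> None:
        self.latest: Optional[dict] = None
        self.finished = False

    def on_text_frame(self, payload: str) -> None:
        message = json.loads(payload)
        status = message.get("status")
        if status == "recog-in-progress":
            # Provisional result: replaces any earlier provisional result.
            self.latest = message
        elif status == "recog-finished":
            # Fixed result: no further changes are expected.
            self.latest = message
            self.finished = True
        # Assumption: any other status is ignored by this sketch.


if __name__ == "__main__":
    tracker = ResultTracker()
    tracker.on_text_frame('{"status": "recog-in-progress", "response": []}')
    tracker.on_text_frame('{"status": "recog-finished", "response": []}')
    print(tracker.finished, tracker.latest["status"])
```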

4. mimi ASR

4.1 Overview of the service

The mimi ASR service provides customers with a transcription of what was spoken in the audio stream.

4.2 Overview of the response

4.2.1 General LVCSR (Large Vocabulary Continuous Speech Recognition)

Table 4.2.A Detailed Description of Response

The table below shows the keys in a typical response.

| Key | Description |
|---|---|
| type | The recognition system name (asr) and the subsystem name. |
| session_id | The unique identifier of the request. You can use it for logging. |
| status | The current recognition status, as listed in Section 3.1.4. |
| response | The array of recognition result objects. |
| response.result | The recognition result. |
| response.time | The time alignment of the recognition result, in milliseconds. |

    // server -> client
    {
      "type":"asr#mimilvcsr",
      "session_id":"d55682b2-2712-11e6-8594-42010af0001a",
      "status":"recog-in-progress",
      "response":[
        {"result":"あらゆる","pronunciation":"アラユル","time":[630,1170]},
        {"result":"現実","pronunciation":"ゲンジツ","time":[1170,1790]},
        {"result":"を","pronunciation":"ヲ","time":[1790,2040]}
      ]
    }

    // server -> client
    {
      "type":"asr#mimilvcsr",
      "session_id":"d55682b2-2712-11e6-8594-42010af0001a",
      "status":"recog-finished",
      "response":[
        {"result":"あらゆる","pronunciation":"アラユル","time":[630,1170]},
        {"result":"現実","pronunciation":"ゲンジツ","time":[1170,1790]},
        {"result":"を","pronunciation":"ヲ","time":[1790,2040]},
        {"result":"すべて","pronunciation":"スベテ","time":[2240,2890]},
        {"result":"自分","pronunciation":"ジブン","time":[2990,3360]},
        {"result":"の","pronunciation":"ノ","time":[3360,3460]},
        {"result":"ほう","pronunciation":"ホー","time":[3460,3720]},
        {"result":"へ","pronunciation":"エ","time":[3720,3850]},
        {"result":"ねじ曲げ","pronunciation":"ネジマゲ","time":[3850,4410]},
        {"result":"た","pronunciation":"タ","time":[4410,4530]},
        {"result":"の","pronunciation":"ノ","time":[4530,4670]},
        {"result":"だ","pronunciation":"ダ","time":[4670,4930]}
      ]
    }
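A response like the ones above can be turned into a transcript with per-word timing as in the following minimal sketch. The function `transcript_from_response` is hypothetical. Reading the two `time` values as start and end in milliseconds, and joining results without spaces (as suits the Japanese example), are assumptions of this sketch.

```python
import json
from typing import List, Tuple

Word = Tuple[str, int, int]  # (result, start_ms, end_ms), assumed meaning


def transcript_from_response(payload: str) -> Tuple[str, List[Word]]:
    """Extract the joined transcript and per-word timing from a response."""
    message = json.loads(payload)
    words = [
        (item["result"], item["time"][0], item["time"][1])
        for item in message["response"]
    ]
    # Assumption: results are concatenated directly, without separators.
    text = "".join(result for result, _, _ in words)
    return text, words


if __name__ == "__main__":
    sample = json.dumps({
        "type": "asr#mimilvcsr",
        "status": "recog-in-progress",
        "response": [
            {"result": "あらゆる", "pronunciation": "アラユル", "time": [630, 1170]},
            {"result": "現実", "pronunciation": "ゲンジツ", "time": [1170, 1790]},
        ],
    })
    text, words = transcript_from_response(sample)
    print(text)
    for result, start_ms, end_ms in words:
        print(result, start_ms, end_ms)
```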
